The Future of Computing: What’s Next for Central Processing Units?

Central Processing Units (CPUs) have come a long way since their inception. In the early days of computing, CPUs were simple and limited in their capabilities. However, as technology advanced, so did the design and functionality of CPUs. Today, CPUs are at the heart of every computing device, from smartphones to supercomputers.

The importance of CPUs in computing cannot be overstated. They execute program instructions and perform calculations, which makes them the brain of any computer system. Without a CPU, a computer could not process data or perform tasks.

The Rise of Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) have emerged as game-changers in the world of technology. These fields involve the development of algorithms and models that can learn from data and make intelligent decisions. As AI and ML applications have become more prevalent, demand for more capable CPUs has grown.

AI and ML applications require processors that can handle complex calculations and process large amounts of data quickly. Traditional CPUs are designed for general-purpose computing and are not optimized for the highly parallel arithmetic these workloads depend on. As a result, companies like Intel and AMD have started adding AI-oriented features to their CPUs, such as vector neural network instructions (VNNI) and, in Intel’s case, Advanced Matrix Extensions (AMX).

Examples of AI and ML applications that require advanced CPUs include image recognition, natural language processing, and autonomous vehicles. These applications rely on deep learning algorithms that require massive amounts of computational power. Advanced CPUs with specialized instructions and architectures are needed to accelerate these computations and deliver real-time results.
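
Much of the work in deep learning reduces to multiply-accumulate (MAC) operations inside matrix multiplications. The sketch below is a naive pure-Python matrix multiply that counts its MACs, to show how quickly the cost grows; the function names and the layer size in the note that follows are illustrative assumptions, not taken from any particular model or library.

```python
def matmul(a, b):
    """Naive matrix multiply. Returns (product, multiply-accumulate count)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    product = [[0.0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # one multiply-accumulate (MAC)
                macs += 1
            product[i][j] = acc
    return product, macs


def dense_layer_macs(batch, in_features, out_features):
    """MACs for one fully connected layer: every input feeds every output."""
    return batch * in_features * out_features


# Example: a 2x2 by 2x2 product needs 2 * 2 * 2 = 8 MACs.
product, macs = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

A single hypothetical fully connected layer mapping 4,096 inputs to 4,096 outputs already needs 4,096 × 4,096 = 16,777,216 MACs per input sample. Specialized vector and matrix instructions help by performing many of these MACs per instruction instead of one at a time.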

Quantum Computing: The Next Frontier

Quantum computing is an emerging field that has the potential to revolutionize computing as we know it. Unlike traditional computers that use bits to represent information, quantum computers use quantum bits, or qubits. A qubit can exist in a superposition of 0 and 1, and a register of n qubits is described by 2^n amplitudes at once. For certain problems, quantum algorithms exploit this to achieve dramatic speedups, in some cases exponential, over the best known classical methods.
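
To make superposition a little more concrete, here is a minimal state-vector sketch. It assumes the standard textbook model: a single qubit is a pair of amplitudes, a Hadamard gate turns |0> into an equal superposition, and measurement probabilities are the squared magnitudes of the amplitudes. The function names are illustrative, and this is a classical simulation, not how quantum hardware works internally.

```python
import math


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state [amp0, amp1]."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return [s * (a0 + a1), s * (a0 - a1)]


def probabilities(state):
    """Measurement probability of each basis state: squared amplitude magnitude."""
    return [abs(amp) ** 2 for amp in state]


def amplitudes_needed(n_qubits):
    """A classical simulator must store 2**n amplitudes for n qubits."""
    return 2 ** n_qubits


# Start in |0> and apply a Hadamard gate: both outcomes become equally likely
# (probability 0.5 each, up to floating-point rounding).
plus = hadamard([1, 0])
print(probabilities(plus))
print(amplitudes_needed(50))  # 1125899906842624
```

The last line shows why classical simulation runs out of steam: at 16 bytes per double-precision complex amplitude, storing the state of just 50 qubits this way would take about 18 petabytes.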

Quantum computing is still in its early stages. While there have been significant advances in the field, practical quantum computers that can outperform traditional computers on useful problems are still a long way off. One of the main challenges is maintaining the stability of qubits, which are highly sensitive to external disturbances such as heat and electromagnetic noise; this loss of quantum state is known as decoherence.

Despite these challenges, quantum computing holds great promise for solving problems that are currently intractable for classical computers. For example, quantum computers could be used to optimize complex logistics, simulate molecular interactions, and break widely used public-key encryption schemes such as RSA. Quantum computers will not replace CPUs, however: they depend on classical processors for control, error-correction decoding, and pre- and post-processing of results.
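
The encryption threat comes from Shor's algorithm, which reduces factoring an integer N to finding the order r of a number a modulo N (the smallest r with a^r mod N = 1). The sketch below finds that order by brute force, which takes time exponential in the size of N on a classical machine; the quantum speedup lies entirely in that one step, while the surrounding arithmetic runs on an ordinary processor. Function names are illustrative.

```python
import math


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step Shor's algorithm performs efficiently on a quantum computer.
    """
    if n < 2 or math.gcd(a, n) != 1:
        raise ValueError("need n >= 2 and a coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Classical post-processing from Shor's algorithm: turn an order into factors."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root: retry with a different a
    return math.gcd(half - 1, n), math.gcd(half + 1, n)


print(factor_from_order(7, 15))  # (3, 5)
```

For N = 15 and a = 7, the order is 4, so 7^2 mod 15 = 4, and gcd(3, 15) = 3 and gcd(5, 15) = 5 recover the factors.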

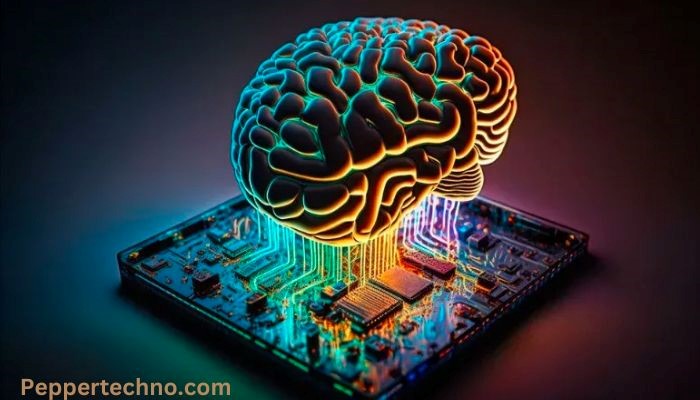

Neuromorphic Computing: Mimicking the Human Brain

Neuromorphic computing is a branch of computer science that aims to mimic the structure and functionality of the human brain. Traditional CPUs are based on the von Neumann architecture, which separates memory and processing units. In contrast, neuromorphic computing seeks to integrate memory and processing units, similar to how the human brain operates.

Neuromorphic computing has the potential to create more efficient processors that can perform complex tasks with lower power consumption. By mimicking the brain’s neural networks, neuromorphic processors can process information in parallel and adapt to changing conditions. This makes them well-suited for tasks such as pattern recognition, sensory processing, and robotics.
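
Many neuromorphic chips model neurons as spiking units that only send a signal when their internal state crosses a threshold, which is part of why they can be so power-efficient. A common textbook model is the leaky integrate-and-fire (LIF) neuron, sketched below in discrete time. The parameter values are arbitrary assumptions, and real neuromorphic hardware implements such dynamics in dedicated circuits rather than in a software loop.

```python
def lif_neuron(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a leaky integrate-and-fire neuron over discrete time steps.

    Returns the list of time steps at which the neuron spiked.
    """
    potential = reset
    spikes = []
    for t, current in enumerate(input_current):
        potential = leak * potential + current  # leak a little, then integrate input
        if potential >= threshold:
            spikes.append(t)   # fire a spike
            potential = reset  # and reset the membrane potential
    return spikes


print(lif_neuron([0.3] * 12))  # [3, 7, 11]
```

With a steady input of 0.3, the potential builds up over four steps before crossing the threshold, so the neuron fires periodically; with no input it stays silent and, in hardware, consumes very little energy.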

Robotics is one promising application of neuromorphic computing. Neuromorphic processors could enable robots to perceive and interact with their environment in a more human-like manner. This could lead to advancements in areas such as autonomous navigation, object recognition, and natural language understanding.

The Emergence of Edge Computing

Edge computing is a paradigm shift in computing that brings processing power closer to the source of data generation. In traditional cloud computing, data is sent to remote servers for processing and analysis. With edge computing, data is processed locally on devices or at the edge of the network, reducing latency and improving real-time decision-making.

The impact of edge computing on CPU technology is significant. CPUs used in edge devices need to be powerful enough to handle real-time processing and analysis of data. They also need to be energy-efficient, as many edge devices are battery-powered and have limited resources.

Advantages of edge computing include reduced latency, improved reliability, and increased privacy and security. For example, in autonomous vehicles, edge computing can enable real-time decision-making without relying on a remote server. This can improve safety and responsiveness in critical situations.
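
A back-of-the-envelope calculation shows why latency matters for a moving vehicle. The sketch assumes light in optical fiber covers roughly 200,000 km per second, places a data center a hypothetical 500 km away, and uses a hypothetical 100 ms end-to-end response time for a remote server; all three numbers are illustrative, not measurements.

```python
FIBER_SPEED_M_PER_S = 2.0e8  # assumption: light in optical fiber, roughly two thirds of c


def round_trip_propagation_ms(distance_km):
    """Lower bound on round-trip time over fiber; ignores routing, queuing, compute."""
    return 2 * distance_km * 1000 / FIBER_SPEED_M_PER_S * 1000


def distance_travelled_m(speed_kmh, delay_ms):
    """Metres a vehicle covers while it waits delay_ms for a response."""
    return speed_kmh / 3.6 * delay_ms / 1000


print(round_trip_propagation_ms(500))  # hypothetical data center 500 km away
print(distance_travelled_m(100, 100))  # hypothetical 100 ms cloud response at 100 km/h
```

Propagation alone to a data center 500 km away adds at least 5 ms of round-trip delay, and at 100 km/h a car covers about 2.8 m while waiting 100 ms for an answer. Processing on board removes the network leg from that budget entirely.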

However, there are also challenges associated with edge computing. One challenge is the limited computational resources available on edge devices. CPUs used in edge devices need to strike a balance between performance and power consumption. Additionally, managing and securing a distributed network of edge devices can be complex.

The Impact of 5G on CPU Technology

The rollout of 5G technology is expected to change how CPUs are designed and used. 5G promises faster speeds, lower latency, and greater capacity than previous generations of wireless technology. This opens up new possibilities for CPU-intensive applications that require real-time processing and analysis of data.

One example of a 5G application that requires advanced CPUs is autonomous vehicles. With 5G, vehicles can communicate with each other and with infrastructure in real time, enabling safer and more efficient transportation. Advanced CPUs are needed to process the massive amounts of data generated by sensors and make split-second decisions.

Another example is virtual reality (VR) and augmented reality (AR). 5G can provide the high bandwidth and low latency required for immersive VR and AR experiences. Capable processors, typically CPUs working alongside GPUs, are needed to render high-resolution graphics and track user movements in real time.

The Role of CPUs in the Internet of Things (IoT)

The Internet of Things (IoT) refers to the network of interconnected devices that can communicate and exchange data. IoT devices range from simple sensors to complex industrial machinery. CPUs play a crucial role in IoT, as they are responsible for processing and analyzing the data generated by these devices.

Examples of IoT applications that require advanced CPUs include smart homes, industrial automation, and healthcare monitoring. In a smart home, CPUs are used to control and manage various devices, such as thermostats, security cameras, and appliances. In industrial automation, CPUs are used to monitor and control manufacturing processes. In healthcare monitoring, CPUs are used to collect and analyze patient data in real time.

The challenge with IoT is the sheer scale of devices and the amount of data generated. CPUs used in IoT devices need to be energy-efficient and capable of processing data quickly. They also need to be secure, as IoT devices are often vulnerable to cyberattacks.
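
One common way to keep IoT data volumes manageable is to process readings on the device itself and transmit only summaries. The sketch below reduces a stream of sensor readings to one (min, max, mean) tuple per fixed-size window; the window size and the choice of statistics are arbitrary assumptions for illustration.

```python
from statistics import mean


def summarize(readings, window):
    """Reduce raw sensor readings to one (min, max, mean) summary per window."""
    if window <= 0:
        raise ValueError("window must be positive")
    summaries = []
    for start in range(0, len(readings), window):
        chunk = readings[start:start + window]  # last chunk may be shorter
        summaries.append((min(chunk), max(chunk), mean(chunk)))
    return summaries


temperatures = [20.0, 21.0, 22.0, 30.0, 31.0, 32.0]
print(summarize(temperatures, 3))  # [(20.0, 22.0, 21.0), (30.0, 32.0, 31.0)]
```

With one reading per second and a 60-reading window, a device would send one summary per minute instead of 60 raw values, trading a little on-device computation for far less radio traffic, which is usually the larger drain on a battery.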

Security Concerns and the Future of CPU Design

Security concerns related to CPUs have become increasingly prevalent in recent years. Prominent examples are the Spectre and Meltdown vulnerabilities, disclosed in 2018, which affected a wide range of CPUs. These vulnerabilities exploited speculative execution, a performance optimization in modern processors, to let attackers read sensitive data from memory they were not supposed to access.

In response to these security concerns, CPU design is evolving to address these vulnerabilities. New CPU architectures are being developed that incorporate security features, such as hardware-based encryption and isolation of sensitive data. Additionally, software patches and updates are being released to mitigate the risk of these vulnerabilities.

The future of CPU design will likely focus on improving security without sacrificing performance. This will involve a combination of hardware and software solutions to protect against both known and unknown vulnerabilities. As the threat landscape evolves, CPU manufacturers will need to stay ahead of attackers and continuously innovate to ensure the security of their products.

The Future of Cloud Computing and CPUs

Cloud computing has transformed the way CPUs are used and designed. With cloud computing, computing resources are delivered over the internet on-demand. This allows businesses and individuals to access powerful CPUs and storage without the need for expensive hardware.

The advantages of cloud computing include scalability, cost-efficiency, and flexibility. CPUs used in cloud computing need to be able to handle a wide range of workloads and be highly scalable. This has led to the development of processors designed specifically for cloud workloads, such as Amazon’s Arm-based AWS Graviton processors.
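
Scalability in the cloud often means adding or removing instances as CPU load changes. As one concrete example, the Kubernetes Horizontal Pod Autoscaler documents the rule desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule to average CPU utilization in percent; the real controller also applies a tolerance and stabilization windows, which this simplified version omits.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization):
    """Replica count from the documented Kubernetes HPA scaling rule.

    Simplified: no tolerance band, no stabilization window, no min/max limits.
    """
    if target_utilization <= 0:
        raise ValueError("target utilization must be positive")
    return math.ceil(current_replicas * current_utilization / target_utilization)


print(desired_replicas(4, 90, 60))  # 6: scale out when CPUs run hotter than target
print(desired_replicas(4, 30, 60))  # 2: scale in when they sit mostly idle
```

Rounding up errs on the side of extra capacity, so a workload that needs 4.2 instances' worth of CPU gets 5 rather than 4.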

However, there are also challenges associated with cloud computing. One challenge is the reliance on internet connectivity. Without a stable internet connection, access to cloud resources can be disrupted. Another challenge is data privacy and security. Storing data in the cloud raises concerns about unauthorized access and data breaches.

Conclusion: The Endless Possibilities of CPU Technology

CPUs have evolved significantly over the years and continue to play a crucial role in computing. AI and ML, quantum computing, neuromorphic computing, edge computing, 5G, IoT, and cloud computing are all driving the need for advanced CPUs.

The future of CPU technology holds endless possibilities. From faster and more efficient CPUs for AI and ML applications to quantum computers that can solve complex problems, the potential for innovation is immense. However, with these advancements come challenges, such as security concerns and the need for energy-efficient designs.

As technology continues to advance, CPUs will continue to evolve to meet the demands of the ever-changing computing landscape. The future of CPUs is exciting, and it will be fascinating to see how they shape the future of computing as a whole.