Understanding the Types of Reinforcement Learning

Anyone who starts exploring artificial intelligence soon runs into a fascinating concept known as reinforcement learning. Imagine teaching a computer to make decisions and take actions based on trial and error, much like how we learn from our experiences. Reinforcement learning gives machines the ability to learn and adapt through interaction with their environment, and it comes in several distinct types. In this blog post, we will look at the main types of reinforcement learning and how they differ. So buckle up as we explore the world of reinforcement learning together!

The Different Types of Reinforcement Learning

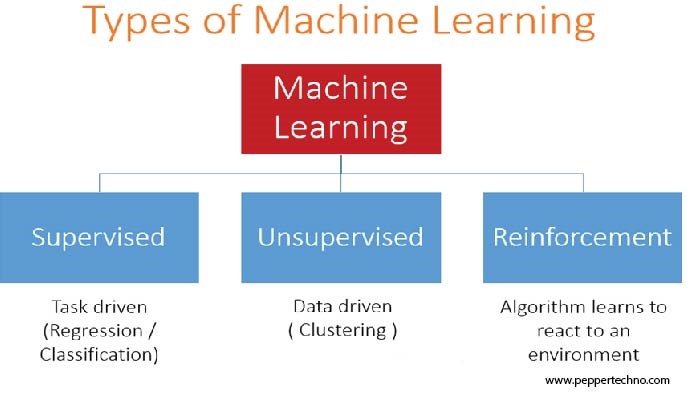

Reinforcement learning algorithms are usually grouped by how they learn and make decisions. One of the fundamental distinctions is between model-based and model-free reinforcement learning.

Model-based methods involve building a representation of the environment to plan actions, while model-free approaches directly learn from experience without explicitly modeling the environment.

Another essential type is value-based reinforcement learning, where the agent evaluates different actions based on their expected long-term rewards. This method helps in selecting the most advantageous course of action at any given time.

Policy-based reinforcement learning focuses on directly optimizing the policy or strategy followed by an agent to maximize cumulative rewards over time. On the other hand, Actor-Critic methods combine both value estimation and policy improvement for more stable and efficient learning.

Each type has its strengths and weaknesses, making it essential to choose wisely based on specific requirements and applications.

Model-based vs. Model-free reinforcement learning

When exploring reinforcement learning, one crucial distinction to understand is between model-based and model-free approaches. In model-based reinforcement learning, an agent learns (or is given) a predictive model of the environment: how each action changes the state and what reward follows. The agent then uses that model to simulate outcomes and plan its decisions. In model-free reinforcement learning, on the other hand, the agent learns a policy or value function directly, without explicitly modeling the environment dynamics. This approach relies on trial-and-error experience to improve decision-making.

Model-based methods can be advantageous when the environment has predictable dynamics, because they can plan ahead and usually need fewer real interactions. Conversely, model-free methods are more flexible in uncertain or complex environments where an explicit model may not be feasible or accurate enough, although they typically need more experience to learn well. The choice between these two approaches ultimately depends on factors like task complexity, available data, and computational resources in your specific reinforcement learning context.
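To make the planning idea concrete, here is a minimal sketch of model-based planning. It assumes a made-up four-state corridor whose dynamics are fully known; the `model` function, the reward of 1.0 at the goal, and the discount factor are all illustrative assumptions. Value iteration is used here as one common planning method, not the only one.

```python
# Hypothetical corridor: states 0..3, state 3 is the goal (terminal).
# Actions: 0 = move left, 1 = move right. All names here are illustrative.
N_STATES = 4
GOAL = 3
ACTIONS = (0, 1)
GAMMA = 0.9  # discount factor (assumed)


def model(state, action):
    """Known dynamics: return (next_state, reward) for a state-action pair."""
    if state == GOAL:
        return state, 0.0
    next_state = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def value_iteration(tol=1e-8):
    """Plan with the model: sweep states until the values stop changing."""
    values = [0.0] * N_STATES
    while True:
        delta = 0.0
        for s in range(N_STATES):
            if s == GOAL:
                continue
            outcomes = [model(s, a) for a in ACTIONS]
            best = max(r + GAMMA * values[s2] for s2, r in outcomes)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


def greedy_policy(values):
    """Pick, in each state, the action whose simulated outcome looks best."""
    policy = []
    for s in range(N_STATES):
        returns = []
        for a in ACTIONS:
            s2, r = model(s, a)
            returns.append(r + GAMMA * values[s2])
        policy.append(returns.index(max(returns)))
    return policy


values = value_iteration()
policy = greedy_policy(values)
```

Notice that the planner never acts in the environment; it only queries `model`. On this toy corridor the resulting policy moves right in every non-goal state. A model-free agent would have to discover the same thing by actually trying actions.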

Value-based reinforcement learning

Value-based reinforcement learning focuses on estimating the value of different actions in a given state. This type of RL algorithm aims to maximize the cumulative reward by selecting the action that leads to the highest expected return.

In value-based RL, an agent learns a value function that assigns a value to each state, or to each action in a state, based on its predicted long-term outcome. The agent then selects the action with the highest estimated value in each state it encounters, usually with some random exploration mixed in during training.

Popular algorithms like Q-learning and Deep Q-Networks (DQN) fall under this category, where they iteratively improve their estimates of action values through trial and error interactions with the environment.
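As a rough illustration of that iterative improvement, here is a minimal tabular Q-learning sketch. The corridor environment, the `step` function, and the hyperparameter values are assumptions made up for this example; only the update rule itself is standard Q-learning.

```python
import random

# Hypothetical corridor: states 0..3, goal at 3; action 0 = left, 1 = right.
N_STATES, GOAL, ACTIONS = 4, 3, (0, 1)
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # assumed learning rate, discount, exploration


def step(state, action):
    """Environment the agent can only sample from: (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore sometimes, otherwise act greedily
            # (ties between equal values are broken at random).
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best next-state value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q


q_table = train()
greedy = [row.index(max(row)) for row in q_table[:GOAL]]
```

After training, `greedy` holds the highest-valued action for each non-goal state, which on this toy corridor is "move right" everywhere. DQN follows the same idea but replaces the table with a neural network so it can cope with far larger state spaces.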

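By focusing on maximizing expected rewards, value-based RL learns a policy without representing it explicitly: the policy is simply "pick the action with the highest value." Combined with function approximation, as in DQN, this makes it suitable for complex decision-making tasks with large state spaces, as long as the set of actions is discrete and small enough to compare.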

Policy-based reinforcement learning

Policy-based reinforcement learning focuses on directly learning the optimal policy that maps states to actions. Instead of estimating a value for every action like value-based methods do, policy-based algorithms adjust the policy itself so that actions leading to higher rewards become more likely.

By parameterizing a policy and updating it based on maximizing expected cumulative rewards, these algorithms can handle both discrete and continuous action spaces more effectively than some other approaches. This method is particularly useful in complex environments where it’s challenging to estimate accurate value functions or when dealing with high-dimensional action spaces.

Policy gradient methods, a popular technique in policy-based reinforcement learning, use gradients estimated from reward signals to update the policy parameters incrementally. Because they optimize the policy directly and change it in small steps, they often learn more smoothly than value-based methods, although their gradient estimates can be noisy and may need many samples.
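Here is a minimal sketch of a policy gradient update, using the REINFORCE rule on a made-up two-armed bandit. The reward values, learning rate, and softmax parameterization are assumptions chosen to keep the example short; rewards are deterministic only so the behavior is easy to follow.

```python
import math
import random

REWARDS = (0.0, 1.0)  # hypothetical bandit: arm 1 always pays more
LEARNING_RATE = 0.1   # assumed step size


def softmax(prefs):
    """Turn action preferences into probabilities (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(steps=2000, seed=0):
    rng = random.Random(seed)
    theta = [0.0, 0.0]  # policy parameters: one preference per arm
    for _ in range(steps):
        probs = softmax(theta)
        action = 0 if rng.random() < probs[0] else 1
        reward = REWARDS[action]
        # For a softmax policy, d log pi(action) / d theta[a]
        # equals 1[a == action] - pi(a).
        for a in range(len(theta)):
            grad_log_pi = (1.0 if a == action else 0.0) - probs[a]
            theta[a] += LEARNING_RATE * reward * grad_log_pi
    return softmax(theta)


final_probs = reinforce()
```

There is no value table anywhere in this code: the reward only scales how strongly the chosen action's probability is pushed up. Over training, the policy shifts its probability toward arm 1.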

Actor-Critic reinforcement learning

In the realm of reinforcement learning, Actor-Critic stands out as an approach that combines the strengths of both value-based and policy-based methods. This technique involves two distinct components working in tandem: the actor, responsible for selecting actions, and the critic, providing feedback on those actions. The actor is a policy that learns which actions to take through trial and error, while the critic learns a value function from the rewards it observes and uses it to judge whether each action turned out better or worse than expected. By working together, they improve each other’s performance iteratively.
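The interplay between the two components can be sketched with a one-step actor-critic loop. This is a minimal illustration, assuming a made-up four-state corridor, tabular parameters, and hand-picked learning rates; real systems typically use neural networks for both parts.

```python
import math
import random

# Hypothetical corridor: states 0..3, goal at 3; action 0 = left, 1 = right.
N_STATES, GOAL = 4, 3
GAMMA, ACTOR_LR, CRITIC_LR = 0.9, 0.2, 0.2  # assumed hyperparameters


def step(state, action):
    next_state = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(episodes=2000, max_steps=200, seed=0):
    rng = random.Random(seed)
    prefs = [[0.0, 0.0] for _ in range(N_STATES)]  # actor: action preferences
    values = [0.0] * N_STATES                      # critic: estimates of V(s)
    for _episode in range(episodes):
        state = 0
        for _step in range(max_steps):  # cap episode length as a safeguard
            probs = softmax(prefs[state])
            action = 0 if rng.random() < probs[0] else 1
            next_state, reward, done = step(state, action)
            # Critic's feedback: was the outcome better than it expected?
            target = reward if done else reward + GAMMA * values[next_state]
            td_error = target - values[state]
            values[state] += CRITIC_LR * td_error
            # Actor: raise the chosen action's probability if td_error > 0,
            # lower it if td_error < 0.
            for a in (0, 1):
                grad_log_pi = (1.0 if a == action else 0.0) - probs[a]
                prefs[state][a] += ACTOR_LR * td_error * grad_log_pi
            if done:
                break
            state = next_state
    return [softmax(p) for p in prefs], values


policy, values = actor_critic()
```

The key line is `td_error`: it is the critic's verdict on the action just taken, and the same number drives both the critic's own update and the actor's policy update. On a task this simple you would expect the actor to drift toward moving right, though the exact probabilities depend on the seed and learning rates.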

The Actor-Critic method is particularly effective in scenarios where decisions must be made continuously. Because the critic provides feedback at every step rather than only at the end of an episode, the actor can adjust its strategy quickly, which makes the approach well-suited for tasks like game playing or robotic control systems. The critic's estimates also help steady the actor's updates, so learning tends to be less noisy than with a pure policy gradient method.

Actor-Critic reinforcement learning represents an innovative solution that holds great promise for tackling complex problems in various fields.

Applications of Reinforcement Learning

Reinforcement learning has found its way into various real-world applications, showcasing its versatility and power in solving complex problems. One prominent area where reinforcement learning shines is in robotics. By training robots through trial and error, they can learn to perform tasks like grasping objects or navigating environments autonomously.

In the field of healthcare, reinforcement learning plays a crucial role in personalized treatment recommendations. By analyzing patient data and treatment outcomes, algorithms can suggest tailored therapies for better medical interventions.

Another fascinating application is in finance, where reinforcement learning is used for stock market prediction and algorithmic trading strategies. By continuously adapting to market dynamics, these systems can make informed decisions to optimize investment portfolios.

Moreover, reinforcement learning has made significant strides in autonomous driving technology by enabling vehicles to learn from their environment and make safe driving decisions on the road.

Choosing the right type for your needs

When it comes to choosing the right type of reinforcement learning for your needs, it’s essential to consider factors such as the complexity of your problem, the availability of data, and the desired outcomes.

If you have a well-defined environment whose dynamics are known or can be learned accurately, model-based reinforcement learning might be suitable, and it tends to make the most of limited interaction data. On the other hand, if the environment is too uncertain or complex to model well, model-free approaches like value-based or policy-based methods could be more effective, provided you can collect enough experience to train them.

Value-based reinforcement learning focuses on estimating the value of taking different actions in a given state. Policy-based methods directly learn a policy without explicitly estimating value functions. Actor-Critic combines elements from both approaches by having separate components for the policy (actor) and for value estimation (critic).

Understanding the nuances of each type will help you make an informed decision based on your specific requirements and goals.

Conclusion

In the vast realm of reinforcement learning, understanding the different types is crucial for success. Model-based and model-free approaches offer distinct advantages, while value-based and policy-based methods provide unique ways to optimize decision-making. The Actor-Critic model combines the best of both worlds by leveraging strengths from each approach.

As you delve into the world of reinforcement learning, consider your specific needs and goals when choosing a method. Whether you prioritize efficiency, accuracy, or scalability, there is a type of reinforcement learning that suits your requirements.

By exploring these various types of reinforcement learning and their applications, you can harness this powerful technology to solve complex problems in diverse fields like robotics, gaming, finance, healthcare, and more. Stay curious and keep experimenting with different techniques to unlock new possibilities in this exciting field!